Recently, the proliferation of servers (both real and virtual) has meant that a good hour or so of my weekend must be spent patching. I would rather not have to do that and will be looking to move to Landscape to do that for me. Stay tuned.

Author Archives: wargus

Configuring Mattermost on Ubuntu 16.04 with Apache, Mysql and Let’s Encrypt

First some background:

I’ve become increasingly aware of ‘free’ services like Slack (Facebook and Google also fit into this category). While they do offer free, convenient services, the true cost is to your privacy and security. Having some technical knowledge means that I can have the convenience and features of their platforms without having to sacrifice either – it’s the best of both worlds!

My notes below will only cover some of the more difficult aspects of configuring apache and how I circumvented let’s encrypt’s process of creating and accessing hidden directories. The Mattermost documentation is your friend too; their setup guides were accurate and effective. To anyone reading this: I strongly suggest setting up a test server first before attempting to create a production system from scratch.

Setting up Mattermost:

As stated above, documentation is your friend. I set up the system on a test VM and was able to get it running with minimal fuss. I created a MySQL user and database for the installation on my production web server using phpMyAdmin and from there, the rest of the configuration was done from within Mattermost itself. Mattermost encourages you to use Nginx and PostgreSQL, but MySQL works too: no changes to the SqlSettings section are needed except the DataSource directive, which you will need to modify to suit the username, password and database that you set up.
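For anyone unsure what the DataSource value should look like, here is a minimal sketch that assembles one. The shape (user:password@tcp(host:port)/database?charset=utf8mb4,utf8) is what I recall from the default config.json of that era, so check it against your own file; the function name and the example user, password and database are placeholders of mine.

```shell
# Build a Mattermost MySQL DataSource string of the form
#   user:password@tcp(host:port)/database?charset=utf8mb4,utf8
# All values passed in are placeholders; substitute your own.
mm_datasource() {
    # $1=user  $2=password  $3=database  $4=host (default 127.0.0.1)  $5=port (default 3306)
    printf '%s:%s@tcp(%s:%s)/%s?charset=utf8mb4,utf8\n' \
        "$1" "$2" "${4:-127.0.0.1}" "${5:-3306}" "$3"
}

# Example call with made-up credentials.
mm_datasource mmuser 'change-me' mattermost
```

Paste the printed string into the DataSource entry of config.json and restart Mattermost.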

Once I was satisfied with the setup I migrated the directory structure from the testbed to the production server and set up the init scripts as per the Mattermost documentation so it runs as a service under its own account. I will stress that if you do that, be sure to check the data directory directive so that the right locations are accessible. If you have any trouble with Mattermost the logs are a good place to start looking for the problem. 😉

Setting up Let’s Encrypt:

This is really a two-stage process. The first stage is to set up a sub-domain using your DNS provider. I use NoIP, as I can use their client to update my dynamic IP address if and when my internet connection drops out.

As Mattermost runs on a high port number and Apache had not been configured as a reverse proxy just yet, I needed to run Let’s Encrypt in standalone mode. In this mode, letsencrypt acts as its own HTTP server in order to verify you have control over the domains you’re trying to create SSL certificates for. The commands I ran looked like this:

sudo service apache2 stop

sudo letsencrypt certonly --standalone -d www.warbel.net -d bel.warbel.net -d blog.warbel.net -d mattermost.warbel.net

sudo service apache2 start

Let’s encrypt recognized that I needed to add the new domain mattermost to my list of sites and updated the certificate accordingly.
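To double check that the new sub-domain really made it into the certificate, something like the following should do. It is only a sketch: san_names is a helper name I made up, and it simply picks the DNS entries out of the text that openssl x509 prints.

```shell
# Print the DNS names found in `openssl x509 -noout -text` output read from stdin.
san_names() {
    grep -o 'DNS:[^,[:space:]]*' | sed 's/^DNS://'
}

# Example with canned input; on the server, pipe in the real certificate instead:
#   sudo openssl x509 -in /etc/letsencrypt/live/www.warbel.net/fullchain.pem -noout -text | san_names
printf '    X509v3 Subject Alternative Name:\n        DNS:blog.warbel.net, DNS:mattermost.warbel.net\n' | san_names
```

If mattermost.warbel.net is missing from the list, the certificate was not updated and Apache will serve a certificate that does not match the new site.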

Configuring Apache:

Originally I had intended to setup Mattermost as a subdirectory on my primary domain which was in keeping with my previous projects. Unfortunately that seemed impossible. In the end it was easier to setup a sub-domain and then configure apache with a new site. I had to do some serious googling to find a semi-working config. Mattermost uses web-sockets and application program interfaces (APIs) which do not play well with reverse proxies out of the box. Furthermore, as Let’s Encrypt had already reconfigured components of Apache, I had to modify what I found to match with my pre-existing sites.

I created a new site in /etc/apache2/sites-available/ called mattermost.warbel.net.conf and working off this configuration file as an example created the below:

<VirtualHost mattermost.warbel.net:80>

ServerName mattermost.warbel.net

ServerAdmin xxxx@warbel.net

ErrorLog ${APACHE_LOG_DIR}/mattermost-error.log

CustomLog ${APACHE_LOG_DIR}/mattermost-access.log combined

# Enforce HTTPS:

RewriteEngine On

RewriteCond %{HTTPS} !=on

RewriteRule ^/?(.*) https://%{SERVER_NAME}/$1 [R,L]

</VirtualHost>

<IfModule mod_ssl.c>

<VirtualHost mattermost.warbel.net:443>

SSLEngine on

ServerName mattermost.warbel.net

ServerAdmin xxx@warbel.net

ErrorLog ${APACHE_LOG_DIR}/mattermost-error.log

CustomLog ${APACHE_LOG_DIR}/mattermost-access.log combined

RewriteEngine On

RewriteCond %{REQUEST_URI} ^/api/v1/websocket [NC,OR]

RewriteCond %{HTTP:UPGRADE} ^WebSocket$ [NC,OR]

RewriteCond %{HTTP:CONNECTION} ^Upgrade$ [NC]

RewriteRule .* ws://127.0.0.1:8065%{REQUEST_URI} [P,QSA,L]

RewriteCond %{DOCUMENT_ROOT}/%{REQUEST_FILENAME} !-f

RewriteRule .* http://127.0.0.1:8065%{REQUEST_URI} [P,QSA,L]

RequestHeader set X-Forwarded-Proto "https"

<Location /api/v1/websocket>

Require all granted

ProxyPassReverse ws://127.0.0.1:8065/api/v1/websocket

ProxyPassReverseCookieDomain 127.0.0.1 mattermost.warbel.net

</Location>

<Location />

Require all granted

ProxyPassReverse https://127.0.0.1:8065/

ProxyPassReverseCookieDomain 127.0.0.1 mattermost.warbel.net

</Location>

ProxyPreserveHost On

ProxyRequests Off

SSLCertificateFile /etc/letsencrypt/live/www.warbel.net/fullchain.pem

SSLCertificateKeyFile /etc/letsencrypt/live/www.warbel.net/privkey.pem

Include /etc/letsencrypt/options-ssl-apache.conf

</VirtualHost>

</IfModule>

The main difference is that the SSL virtualhost needed to be contained within the SSL module configuration. I’ll also point out that there was a typo in the last ProxyPassReverse directive: the URL was missing the https, which stopped the server from pushing new chat messages automagically to connected clients.

Enable the site with:

sudo a2ensite mattermost.warbel.net

and reload or restart the server – you should have a working Mattermost server.
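If the reload fails, the usual culprit is a module that has not been enabled. The sketch below guesses which modules a site file needs from the directives it uses. It is a rough heuristic of my own (needed_mods is a made-up name) and it does not parse the config properly.

```shell
# List the Apache modules a vhost file appears to need, judged by the directives it uses.
# Heuristic only: it greps for a handful of directives and does not parse the config.
needed_mods() {
    conf=$1
    grep -qi '^[[:space:]]*RewriteEngine' "$conf" && echo rewrite
    grep -qi '^[[:space:]]*SSLEngine' "$conf" && echo ssl
    grep -qi '^[[:space:]]*RequestHeader' "$conf" && echo headers
    # ProxyPass* directives or a RewriteRule with the [P] flag need the proxy modules.
    grep -qiE 'ProxyPass|\[P[],]' "$conf" && printf 'proxy\nproxy_http\n'
    # ws:// targets are handled by the websocket tunnel module.
    grep -qi 'ws://' "$conf" && echo proxy_wstunnel
    return 0
}

# Usage on the server:
#   sudo a2enmod $(needed_mods /etc/apache2/sites-available/mattermost.warbel.net.conf)
#   sudo apache2ctl configtest
```

Running apache2ctl configtest before the reload catches syntax errors without taking the other sites down.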

MotionEye Cameras Failing

It seems that the webcams attached to my Raspberry Pi fail after about 2 days of usage. While I haven’t had the time to delve into the reasons why, a workaround is to have the device restart every day at 5am.

Log into the raspberry pi as the administrator and edit the crontab file with: crontab -e

Then add the following line:

0 5 * * * /sbin/shutdown -r now >/dev/null 2>&1

This will restart the device every day at 5am. It should keep working until I have the time to look through the system logs and find what the problem is. I suspect it may be power related, or just bad coding somewhere?
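If you would rather script the change than edit the crontab by hand, the sketch below adds a job only when it is not already there, so running it twice does no harm. ensure_cron_line is a name I made up; the job line is passed in as an argument.

```shell
# Append a crontab line to the crontab text on stdin unless it is already present.
ensure_cron_line() {
    line=$1
    existing=$(cat)
    # Echo the existing crontab back out unchanged (if there was one).
    [ -n "$existing" ] && printf '%s\n' "$existing"
    # Add the new line only when no identical line exists.
    printf '%s\n' "$existing" | grep -qxF -- "$line" || printf '%s\n' "$line"
}

# Usage (as the administrator on the Pi):
#   crontab -l 2>/dev/null | ensure_cron_line '0 5 * * * /sbin/shutdown -r now >/dev/null 2>&1' | crontab -
```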

Migrating from VirtualBox To KVM

As written previously: There are performance benefits to be had by switching from VirtualBox to KVM. And now, after making the switch I can firmly say that not only are the performance benefits noticeable, the configuration of automatic startup and, prima facie, backups, seems to be much easier to establish and use.

I’ve grown very fond of Oracle’s Virtualbox but given that it’s more of a prosumer product rather than an enterprise one, it’s only fair that I learn how to use its bigger brother KVM.

The process of switching to KVM itself was very simple, all things considered. The process I followed, after troubleshooting the various stages worked like this:

- Stop and backup all the virtualbox VMs.

- Convert the virtual disks from a virtualbox to kvm format.

- Create the virtual machines using virt-manager.

- Remove vboxtool and its configs.

- Create a bridge interface on the KVM Host

- Set each of the virtual machines to use the new bridge interface to connect to the internet and local network.

- Configure each VM’s network interface to use the new network interface.

In Detail:

Stopping the machines was easy: simply ssh into them and run shutdown -h now.

Backup the machines using the clone option in VirtualBox.

On the hypervisor, navigate to the virtual machine directory (usually /home/user/VirtualBox VMs/) and create a new disk image from the vdi files like this:

qemu-img convert -f vdi -O qcow2 VIRTUALBOX.vdi KVM.qcow2

Thanks to this website for the useful tip. At this point I moved each of my VM disks to a new separate directory. This wasn’t strictly necessary, it’s just neater!
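With more than a couple of VMs, the conversion is worth looping. A minimal sketch, assuming each VM keeps its .vdi files in its own directory; convert_vdis and the DRY_RUN switch are my own inventions, and the only real command involved is the qemu-img call already shown.

```shell
# Convert every .vdi in a directory to qcow2 next to the original.
# With DRY_RUN=1 the qemu-img commands are printed instead of executed.
convert_vdis() {
    dir=$1
    for vdi in "$dir"/*.vdi; do
        [ -e "$vdi" ] || continue          # no .vdi files matched
        out="${vdi%.vdi}.qcow2"
        if [ "${DRY_RUN:-0}" = 1 ]; then
            printf 'qemu-img convert -f vdi -O qcow2 %s %s\n' "$vdi" "$out"
        else
            qemu-img convert -f vdi -O qcow2 "$vdi" "$out"
        fi
    done
}

# Usage (the VM directory name is hypothetical):
#   DRY_RUN=1 convert_vdis "$HOME/VirtualBox VMs/myvm"
```

Do a dry run first and check there is enough free disk space, as the original .vdi files are left in place.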

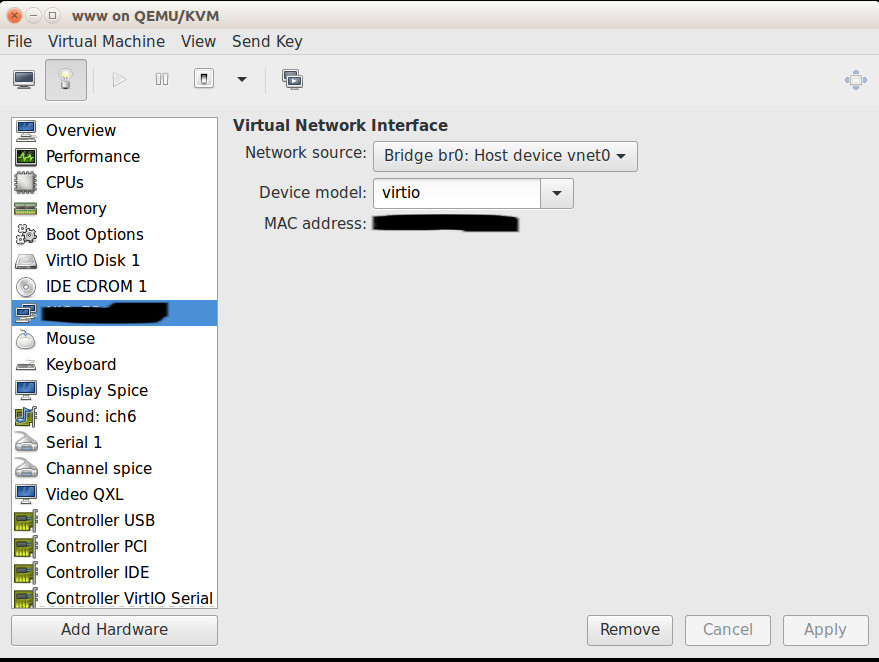

Use virt-manager to then create the virtual machines. The process is intuitive. Be sure to enable starting each VM at host boot. It was at this point that I ran into trouble. By default the virtual machines cannot talk to the host, which is a problem if the host is also a file server. To get around this I had to modify the network config on the host. The KVM network page provided information on how to achieve this. Ultimately, you create a network bridge then set each of the VMs to use that bridge. Below is my modified interfaces file on my Ubuntu 16.04 VM host:

# The primary network interface

#bridge to allow the VMs and the host to communicate

auto br0

iface br0 inet static

address 10.60.204.130

netmask 255.255.255.128

broadcast 10.60.204.255

gateway 10.60.204.129

dns-nameservers 10.60.204.133 8.8.8.8

dns-search warbelnet.local

bridge_ports enp6s0

bridge_stp off

bridge_maxwait 0

bridge_fd 0
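The netmask above is a /25, which makes the broadcast value easy to get wrong. As a sanity check, here is a small sketch that works it out from the address and netmask; broadcast_addr is a helper name I made up.

```shell
# Compute the broadcast address for an IPv4 address and dotted netmask.
broadcast_addr() {
    ip=$1 mask=$2
    old_ifs=$IFS; IFS=.
    set -- $ip $mask      # split into eight octets: $1..$4 address, $5..$8 netmask
    IFS=$old_ifs
    # Per octet: keep the network bits, then set every host bit to 1.
    printf '%d.%d.%d.%d\n' \
        $(( ($1 & $5) | (255 - $5) )) \
        $(( ($2 & $6) | (255 - $6) )) \
        $(( ($3 & $7) | (255 - $7) )) \
        $(( ($4 & $8) | (255 - $8) ))
}

broadcast_addr 10.60.204.130 255.255.255.128   # prints 10.60.204.255
```

The result matches the broadcast line in the interfaces file, so the bridge definition is consistent.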

Below is the configuration in virt-manager for the network in one of my VMs:

This was tested and working.

As my virtual machines are all running Ubuntu 16.04 the network interfaces file needed to be updated as the interface name changes after a hardware change.

Finally, I uninstalled Virtualbox, removed vboxtool (which I had been using to automatically start the Virtualbox VMs), removed vboxtool’s config from /etc/ and restarted everything to test.

Very happy to say it’s been quite a success!

Adding a New Domain and Securing it with SSL

This week my wife asked me to create for her a blog. As such I’ve had to rejig the www server to make space for her new domain.

The process is quite simple:

- Create the new user on the www server so she has sftp access.

- Create a mailbox, and mysql database for the new user.

- Create the directory structure, copy the latest wordpress to it and set file permissions.

- Create the DNS entries in my DNS provider, and locally on my home DNS

- Copy and edit my blog’s apache config files.

- Enable the new site -without SSL

- Update the Let’s Encrypt certificate files with the new domain

- Enable the SSL website.

- Configure wordpress.

In detail:

Create a new user on the web server with adduser USERNAME

Depending on your setup, create a new mailbox, if you like and create a new database and user. I use phpmyadmin and postfixadmin for these tasks. Remember to note down the passwords and make them secure! Use a random password generator if needs must.

I created the directory structure in /var/www/bel.warbel.net/. Moving forward, I think it would be more secure to have users’ websites stored in their home directories and then have the users jailed to stop access to the wider system. It would also make sense to have the sub-domain match their username for simplicity’s sake. Be sure to change ownership once you’ve copied in the latest WordPress: chown USER:www-data /var/www/bel.warbel.net -R

WordPress (as www-data) will need write permissions on the sub directories particularly in the data directories to allow for downloading plugins and themes. Be sure to chmod g+w those directories.
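A sketch of the chmod step, applied to directories only so that file permissions are left alone. grant_group_write is a name of my own, and wp-content is my assumption for which directory needs it on a stock WordPress install.

```shell
# Give the group write access to every directory under the given path.
grant_group_write() {
    find "$1" -type d -exec chmod g+w {} +
}

# Usage, run as root (wp-content is an assumption, adjust to your layout):
#   grant_group_write /var/www/bel.warbel.net/wp-content
```

Because the group on those directories is www-data, this lets WordPress download plugins and themes while the site owner keeps ownership of the files.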

At this point, if you have not done so already, create the DNS entries for your site. For me this meant updating my internal DNS records with a CNAME for bel.warbel.net to point to www.warbel.net, which I replicated with my external DNS host, https://www.noip.com/, whom I recommend. As I do not have a static IP address, I use their dyndns services on my router.

Next, I copied the /etc/apache2/sites-available/blog.warbel.net.conf and blog.warbel.net-le-ssl.conf and renamed them to bel.warbel.net.conf and bel.warbel.net-le-ssl.conf respectively. The let’s encrypt program will, initially, not expect to see a SSL site, so I commented out the redirects in the non-ssl file and updated the config file for all the references to the hostname and root directories.

Enable the new site: a2ensite bel.warbel.net; service apache2 reload

Run the ssl certificate generator with all the domains you need:

sudo letsencrypt certonly --webroot -w /var/www/html -d www.warbel.net -w /var/www/bel.warbel.net -d bel.warbel.net -w /var/www/blog.warbel.net -d blog.warbel.net

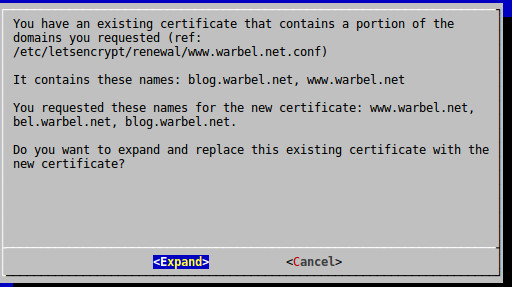

If successful, it will show you a screen, prompting you to agree to update the certificate with the new domain:

At this point, it is safe and appropriate to enable the ssl site with: a2ensite bel.warbel.net-le-ssl.conf; service apache2 reload

Be sure to edit the non-ssl site’s config and re-enable forced ssl.

Finally, configure the new wordpress site. I found that to enable uploading files (updates etc) I needed to add a line to wp-config.php:

define('FS_METHOD', 'direct');

Migrating from Virtualbox to KVM

After doing some much needed research into virtualisation on Linux, it’s become apparent that I should migrate my virtual machines from Virtualbox to KVM. KVM has significant performance benefits and it is a solid ‘production’ system. It’s also clear that if I want to advance my technical skills in the enterprise Linux space, then I need to learn more about KVM and implement it on my systems.

I love Virtualbox because it is cross platform: I can create a VM on a Linux host, and move it to a Windows host if needed. The remote desktop server built into the program, too, is a very handy feature. However I will admit that I very rarely (if ever) spin up a VM on Linux and move it to another OS, and since discovering MobaXTerm on Windows, I can now easily, from any Windows machine (read: my laptops), access the virt-manager X window session of a running VM on KVM. As an aside, MobaXTerm is an amazing program and complements PuTTY quite nicely!

My concerns so far about the migration are threefold:

- I need to convert the disk images into a native format for KVM and virt-manager to use.

- I currently automate my VM startup and shutdown with VBoxTool so I will need to either find a preexisting automation solution, or create my own init scripts.

- Virtualised hardware: Clearly Virtualbox and KVM will virtualise hardware in their own ways, so I need to be sure that the machines can migrate to the new environment and still work. I’m mostly concerned with networking as experience has taught me that Linux is very forgiving of hardware changes, however with the new naming conventions of Ethernet devices, my network configs will need to be updated.

Using a Raspberry Pi as a cheap security system

A small project this weekend. I used my hitherto untouched Raspberry Pi 2 as a security system. The process is reasonably straight forward to anyone who is already familiar with the Raspberry Pi.

I have two web cams which are attached to the Pi via an external powered usb hub. This is necessary as the device does not have enough power to run itself and the cameras. It also has a USB 2.4G wireless dongle.

I’ve installed MotionEye onto the Pi’s SD card. Again, simply using:

sudo dd if=MotionEyesIMGFile of=/dev/sdX

did the job.

Once the device was set up using the wired network, it could be secured with an admin password (by default it has none) and added to the wireless network. All of the settings can be accessed by clicking the menu icon in the top left-hand corner, and the process is intuitive, as is adding the cameras.

The only real difficulty encountered was allowing it to function behind the reverse proxy. To do so relied on having to edit the /etc/motioneye.conf file to include the line:

base_path /security

I had tried to ssh into the device to make the changes, however the file system is set to RO by default, so I ended up removing the microSD card and editing the files on my desktop.

That then needed to be mirrored in my Apache reverse proxy config:

ProxyPass /security http://10.60.204.xxx

ProxyPassReverse /security http://10.60.204.xxx

And done! The new security system is accessible via ssl at: https://www.warbel.net/security/

Enabling Secure SSL to Roundcube, Postfixadmin, Sonarr and Deluge with an Apache Reverse Proxy

As discussed in earlier posts I have hit the limits of what you might call ‘standard’ hosting. As I have multiple machines, both physical and virtual and only a single externally accessible IP address I needed to figure out how to allow access to certain URLs and applications on these machines without relying on NAT and port forwarding rules at the router (layer 3 routing). I also needed to do this securely using SSL.

This blog entry will outline the process that I followed to modify a significant number of applications to allow access through a single Apache host that handles all incoming requests and secures them with SSL to the client.

As my mail server already had SSL configured it needed to be disabled and go back to only accepting traffic on port 80. As all incoming traffic will be from the reverse proxy and a firewall will block all other requests, this will be secure.

Begin by creating a backup of the VM of the mail server. Then on the mail server (once running again):

Disable the SSL site:

sudo a2dissite default-ssl.conf

Disable SSL forwarding in the .htaccess file in /var/www/html/ such that the file will now look like:

#RewriteEngine On

# Redirect all HTTP traffic to HTTPS.

#RewriteCond %{HTTPS} !=on

#RewriteRule ^/?(.*) https://%{SERVER_NAME}/$1 [R,L]

# Send / to /roundcube.

#RewriteRule ^/?$ /roundcube [L]

Edit the Roundcube config, /var/www/html/roundcube/config/config.inc.php, to stop it from forcing SSL. Look for the line below and change it to false:

$config['force_https'] = false;

Remove https redirect from the default config in apache and remove the servername directive:

sudo vi /etc/apache2/sites-enabled/000-default.conf

#RewriteEngine on

#RewriteCond %{SERVER_NAME} =mail.warbel.net

#RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,QSA,R=permanent]

#ServerName mail.warbel.net

This is important as we don’t want incoming requests to be forwarded again.

To fix forwarding/proxying issues with Owncloud there is ample documentation available on their site. The short of it is to edit /var/www/owncloud/config/config.php to include the following lines (modify to suit):

#information included to fix reverse proxying

#https://doc.owncloud.org/server/8.2/admin_manual/configuration_server/reverse_proxy_configuration.html

'trusted_proxies' => ['0.0.0.0'],

'overwritehost' => 'www.warbel.net',

'overwriteprotocol' => 'https',

'overwritewebroot' => '/owncloud',

'overwritecondaddr' => '^0\.0\.0\.0$',

Then enable https rewriting in the /var/www/owncloud/.htaccess file. Owncloud is smart enough to know when its being accessed via proxy.

Change: RewriteCond %{HTTPS} off

to RewriteCond %{HTTPS} on

Just to be safe, unload ssl and restart apache2.

sudo a2dismod ssl; sudo service apache2 restart

Test that SSL is disabled. I discovered that I needed to clear my cache/history, as Chrome would attempt to redirect to https as per my browser history.
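A browser cache can muddy this test, so checking from the command line is more reliable. A minimal sketch that reads the response headers from curl and reports whether the server is still redirecting to https; redirects_to_https is a helper name I made up.

```shell
# Read HTTP response headers (e.g. from `curl -sI URL`) on stdin and succeed
# only if they contain a Location header pointing at an https:// URL.
redirects_to_https() {
    tr -d '\r' | grep -qi '^location:[[:space:]]*https://'
}

# Usage from a machine that can reach the mail server:
#   if curl -sI http://mail.warbel.net/roundcube/ | redirects_to_https; then
#       echo "still redirecting to https"
#   else
#       echo "plain http is being served"
#   fi
```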

I have another server that handles Deluge and Sonarr. I won’t go into depth here, but if you want those applications to be accessible via a reverse proxy then stop the programs and edit their configs. In Sonarr:

Edit the config for Sonarr, /home/<user>/.config/Nzbdrone/config.xml

Edit the line <UrlBase></UrlBase> to

<UrlBase>/sonarr</UrlBase>
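The same edit can be scripted, which is handy when rebuilding the server. This is only a sketch: set_urlbase is a name of my own, and it assumes the element is written as an open and close pair on one line, as shown above.

```shell
# Set the <UrlBase> element in a Sonarr config.xml, keeping a .bak copy of the original.
set_urlbase() {
    file=$1 base=$2
    cp "$file" "$file.bak"
    # Rewrite from the backup so a failed run leaves the original recoverable.
    sed "s|<UrlBase>.*</UrlBase>|<UrlBase>$base</UrlBase>|" "$file.bak" > "$file"
}

# Usage (with Sonarr stopped):
#   set_urlbase /home/<user>/.config/Nzbdrone/config.xml /sonarr
```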

In Deluge, edit the conf file /home/<user>/.config/web.conf and change the line:

"base": "/" to

"base": "/deluge"

Start your services again and check they’re functional.

Now the fun part. (This should work, assuming you have letsencrypt already enabled). Enable the proxy modules in apache2:

sudo a2enmod proxy proxy_http proxy_html proxy_connect; sudo service apache2 restart

Edit your main site’s ssl config in /etc/apache2/sites-enabled/sitename-ssl.config to include the following (again, edit to suit):

ProxyRequests Off

ProxyPreserveHost On

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

ProxyPass /sonarr http://ipaddress:8989/sonarr

ProxyPassReverse /sonarr http://ipaddress:8989/sonarr

#Location directive included to stop unauthorised access to sonarr

<Location /sonarr>

AuthType Basic

AuthName "Sonarr System"

AuthBasicProvider file

AuthUserFile "/etc/apache2/htpasswd"

Require valid-user

</Location>

ProxyPass /deluge http://ipaddress:8112/

ProxyPassReverse /deluge http://ipaddress:8112/

ProxyPass /roundcube/ http://ipaddress/roundcube/

ProxyPassReverse /roundcube/ http://ipaddress/roundcube/

Redirect permanent /roundcube /roundcube/

ProxyPass /postfixadmin http://ipaddress/postfixadmin

ProxyPassReverse /postfixadmin http://ipaddress/postfixadmin

ProxyPass /owncloud/ http://ipaddress/owncloud/

ProxyPassReverse /owncloud/ http://ipaddress/owncloud/

Redirect permanent /owncloud /owncloud/index.php

A few notes on the directives:

- As Sonarr does not have authentication, I’ve used some resources to make it secure: If necessary, generate a strong random password for the Apache htpasswd file here: http://passwordsgenerator.net/ and use this tool to create your htpasswd file contents: http://www.htaccesstools.com/htpasswd-generator/

- The redirect permanent is useful if people try to access /roundcube, which won’t work, rather than /roundcube/ which will.
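If you would rather not paste a password into a website, it can be generated on the server itself. A minimal sketch using /dev/urandom; random_password is a name I made up and the default length of 24 is an arbitrary choice.

```shell
# Print a random alphanumeric password of the given length (default 24),
# drawn from the kernel's /dev/urandom rather than a website.
random_password() {
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "${1:-24}"
    echo
}

random_password 24
```

The password file itself can then be created locally with the htpasswd tool from the apache2-utils package: sudo htpasswd -c /etc/apache2/htpasswd USERNAME prompts for the password and writes the file that the AuthUserFile directive points at. Note that -c overwrites an existing file, so leave it off when adding a second user.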

At this point you should be able to restart everything and it will work (at least it did for me!). Please leave any questions or comments below.

Edit: You may want to stop people inside the network, depending on your dns etc requirements from accessing the mail server on port 80. If so, the following line in your firewall should do the trick, give or take:

$IPTABLES -A INPUT -p tcp --dport 80 ! -s ip.of.www.proxy -j DROP

Summarising the Process

The goal of this project, to some extent, has been to create an environment that functions similarly to a Microsoft exchange +OWA environment using only open-source programs and in a linux environment. The solution had to be robust and scalable and similar to what a small to medium business would use. For the most part I’ve achieved that, however I’ll admit that at this point the lack of a backup solution is a concern. But we’ll put that aside for the time being (due to financial constraints).

I would expect that most end users would be able to adopt the software quickly and integrate it into their workflows. My project would cover most of the basic functions of an office environment, however clearly does not aim to replace or integrate with other software such as HP TRIM, Docushare, Objective etc. Furthermore as far as I’m aware my environment would not be able to support the integration of those programs into the mail server. I digress.

To recap: I’ve been able to create a postfix/dovecot IMAP mail server, secured with SSL. The web interface allows passwords on the MySQL backend to be reset by the user. Shared calendar functions are supported by Own Cloud which also supports file sharing and limited online editing of documents. Again, all secured by true SSL thanks to Let’s Encrypt.

The backend also has web hosting capabilities and useful user management and administration functions. PhpMyAdmin (locked down to the local subnet), PostFixAdmin and the aforementioned Own Cloud are all installed and offer reasonable admin functions similar to an exchange/AD environment. Any L1 technician would be able to use the backends without too much hassle.

So the final stage will be to setup two more things.

- Mailman or similar: PostFixAdmin handles mail aliases well (I would say they’re easier to create than Office365 with ADFS integration) however distribution groups are not supported.

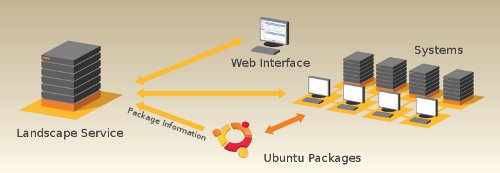

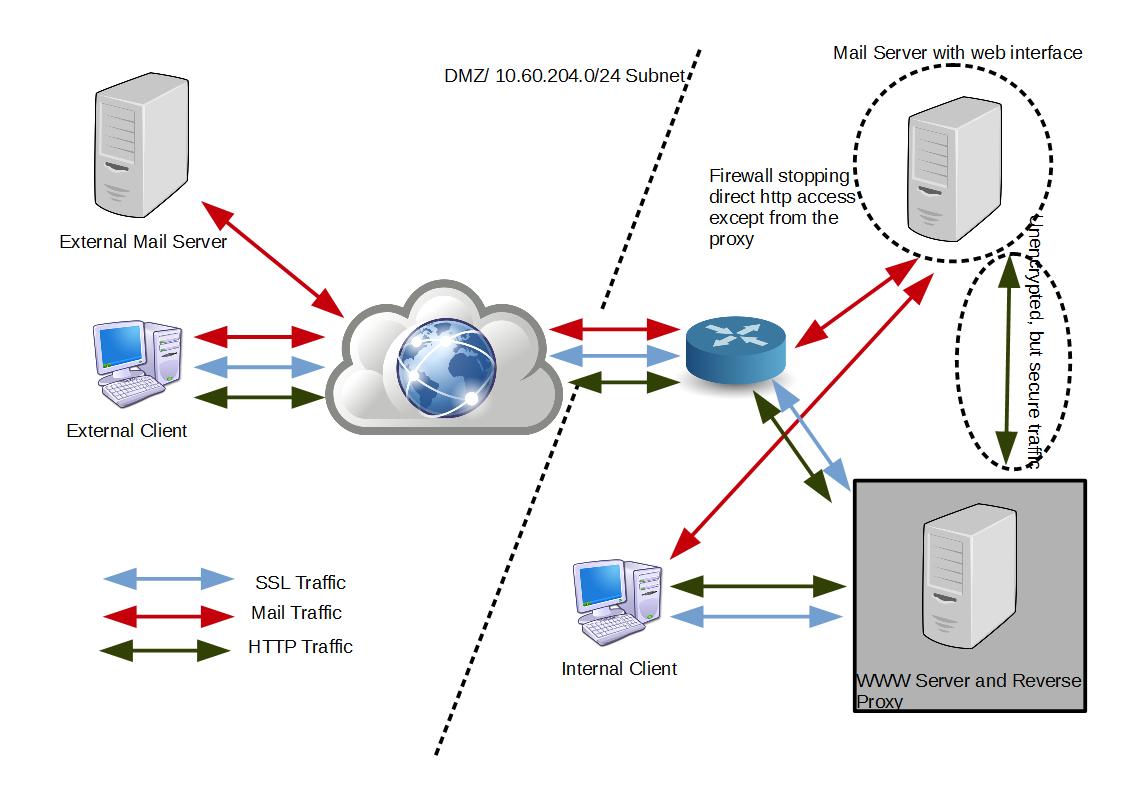

- Setting up apache on the web server to handle all incoming requests to the mail server by using the proxy modules. The picture below should demonstrate:

Fundamentally, the web server will encrypt all the incoming traffic or push clients to SSL connections. It will map the following from the mail server:

- http://mail.warbel.net/owncloud to https://www.warbel.net/owncloud

- http://mail.warbel.net/roundcube to https://www.warbel.net/roundcube

- http://mail.warbel.net/postfixadmin to https://www.warbel.net/postfixadmin

The traffic between the mail server and the www server will technically be unencrypted. Given that they’re both VMs running on the same host though, this presents a limited security hole. The mail server will also be configured to firewall off all incoming connections on port 80 and 443 that are not coming from the web server.

Next Step: Reverse Proxy with Apache2

So my next challenge which has so far been a difficult one, is to set up apache 2 as a reverse proxy. The technical challenge is that my mail server sits behind a firewall on a private network. Technically, so does my web-server. All web traffic (read http/https-80/443) is currently forwarded to my web server. It hosts two websites: blog.warbel.net and www.warbel.net – both with SSL enabled.

My mail server also runs apache and is secured in a similar fashion – all requests on port 80 are forwarded to port 443. It has a valid SSL certificate for mail.warbel.net.

To demonstrate the challenge, I have unashamedly borrowed this graphic from Atlassian:

In their example they have three internal servers with the reverse proxy in the middle, accessing the services on the private network on behalf of the client. In my scenario, the reverse proxy is also a web server in its own right, and only needs to forward SSL requests to the mail server. There are, on the web server, only two URLs that are important. https://mail.warbel.net/roundcube and https://mail.warbel.net/postfixadmin/. I would prefer that I keep the hostname mail.warbel.net intact however as a last resort, proxying the two URLs would work just as well.

Looking ahead, I can see that setting up proxying to just the sub directories will result in SSL errors – apache on mail is configured with only mail.warbel.net as the registered domain name. However I’m yet to figure out how to use apache on the web server to simply forward ssl requests to mail, rather than try and negotiate them itself.